Adobe has given Premiere a major boost in AI-powered tools, especially around masking and finding the right clips faster. The company also confirmed that it has dropped “Pro” from the Premiere name, as it is becoming a family of apps across desktop and mobile, and possibly a future web or companion product.

Premiere on desktop is still very much aimed at professional creators, but the update is designed to save time and make complex edits feel far more achievable. I attended a demo of the latest features at Adobe MAX in Los Angeles, and here’s what I saw:

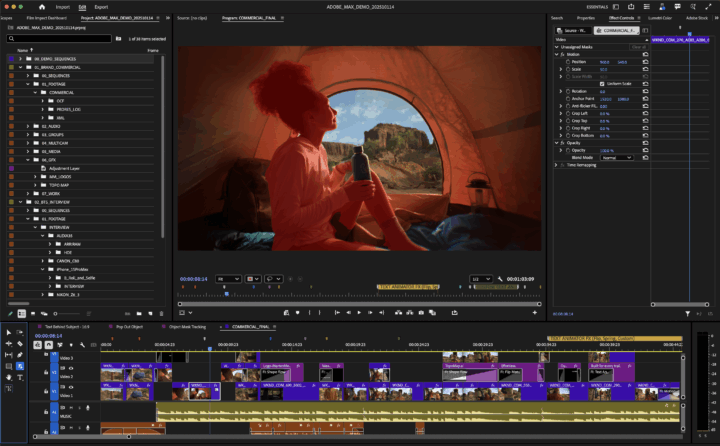

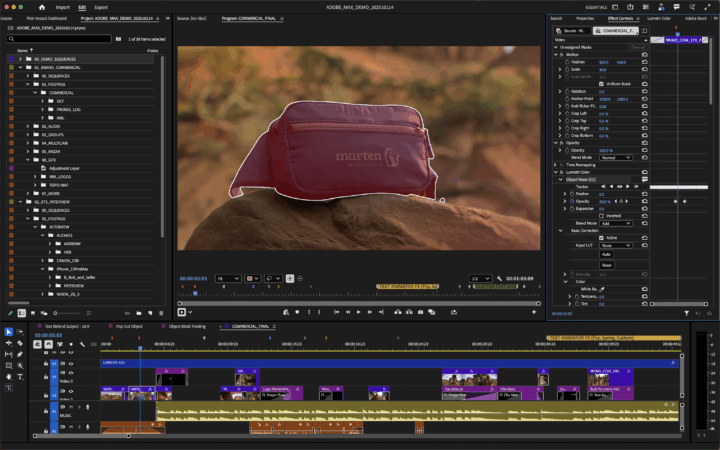

AI Object Mask is the headline upgrade

The new AI Object Mask can automatically isolate people or objects in a shot and track them accurately through complex motion. It replaces much of the manual rotoscoping that editors would otherwise spend hours on.

The masking engine under the hood has been completely rewritten with vector-based tracking, which Adobe says is between four and twenty times faster, depending on resolution. During the briefing, it worked cleanly and quickly on 4K ARRI Alexa footage that was originally shot in LOG and then colour converted.

Editors can:

- Apply masks that stick to clothing and body edges through movement

- Choose object or shape-based masks

- Remove backgrounds or isolate subjects quickly

- Add looks such as sky replacements or character-focused grading

- Track in both directions with a single click

All masks now support different blend modes, so you can add or subtract shapes to fine-tune the masked area.

For example, say you have a scene of people hiking outdoors and you want to brighten the sky behind them. With the rectangle mask, you can draw a shape over the sky, soften the edges and increase saturation and highlights, then subtract the separately masked subjects so their skin and clothing do not shift in colour.

As a long-time Premiere user, I find AI Object Mask a very welcome and necessary addition, as DaVinci Resolve has had an equivalent feature (Magic Mask) for quite some time.

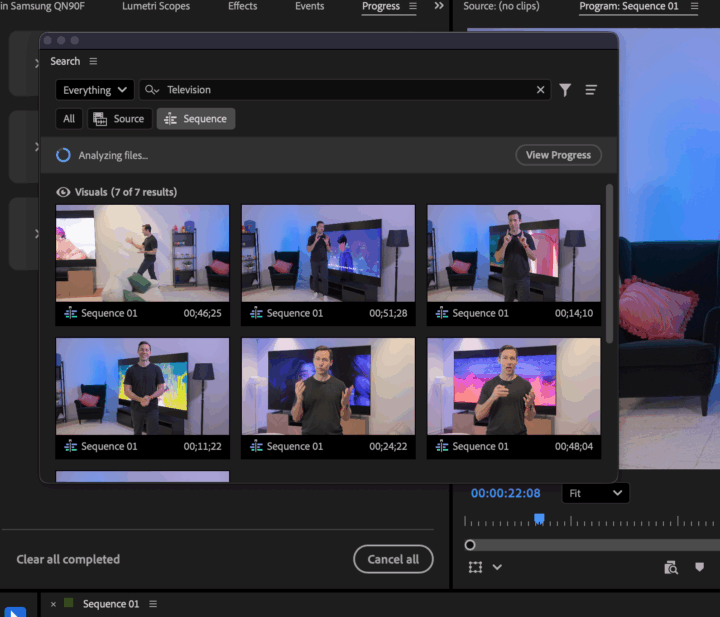

Search your media by what you can see and hear

Media Intelligence search has been expanded. Last year, Premiere could search based on:

- What was said

- Metadata

- Object recognition

Now it also recognises sounds, which means you can look for clips where someone laughs, a car door slams or a dog barks. It can also find images similar to a frame you select. AI processing is done locally on import, which keeps footage private.

You can choose whether to search the bin or only the sequence you are working on, which keeps results fast and relevant.

While sound is a welcome addition to Media Intelligence, you still can’t train the system to recognise something specific to your own footage. Adobe says this could be a feature in the future.

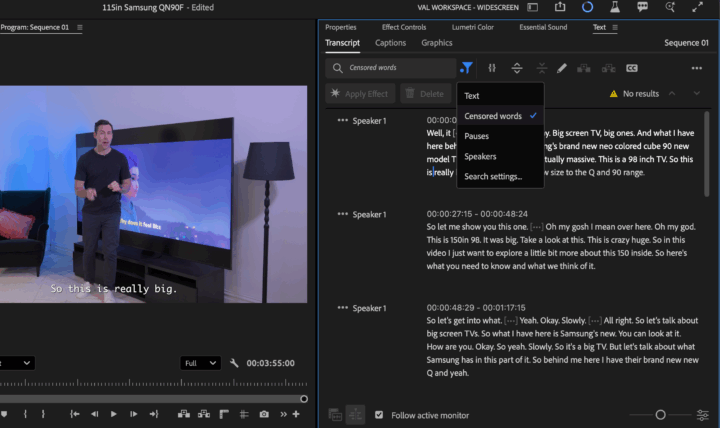

Auto Bleeping keeps content safe for publishing

You can now search for specific words and censor them automatically. The feature builds on the audio transcription system, making it possible to bleep out profanity, names, brands or anything else sensitive. You select a replacement sound such as a beep, quack or dog bark, or bring in your own.

After reviewing the matches, you can also choose to mute or delete the words instead. It is a simple tool for creators publishing to platforms with strict content rules.

Premiere mobile gets YouTube Shorts tools

While the Premiere desktop app does not yet have YouTube integration, Adobe highlighted the new mobile workflow. You can tap “Edit in Adobe Premiere” inside YouTube Shorts to open a dedicated creative space with exclusive effects, transitions and templates. The new mobile edition of Premiere also has a button that does the same. Finished clips can be shared back to YouTube in one tap.

Valens Quinn attended the Adobe MAX conference in Los Angeles as a guest of Adobe Australia.